In brief

Accomplish is operationalising agentic AI while maintaining an ISO 27001-standard control environment. Deploying agentic AI without rigorous security poses significant risks, including prompt injection, policy drift, and access failures. This article therefore argues that firms should develop agentic AI and the information security measures that protect it in parallel. It concludes by inviting you to contribute to a public consultation on Accomplish’s Agentic AI Risk Control Framework, which addresses these risks through end-to-end risk management. The consultation will commence in September.

Why spend time on information security when there are more exciting developments at the moment?

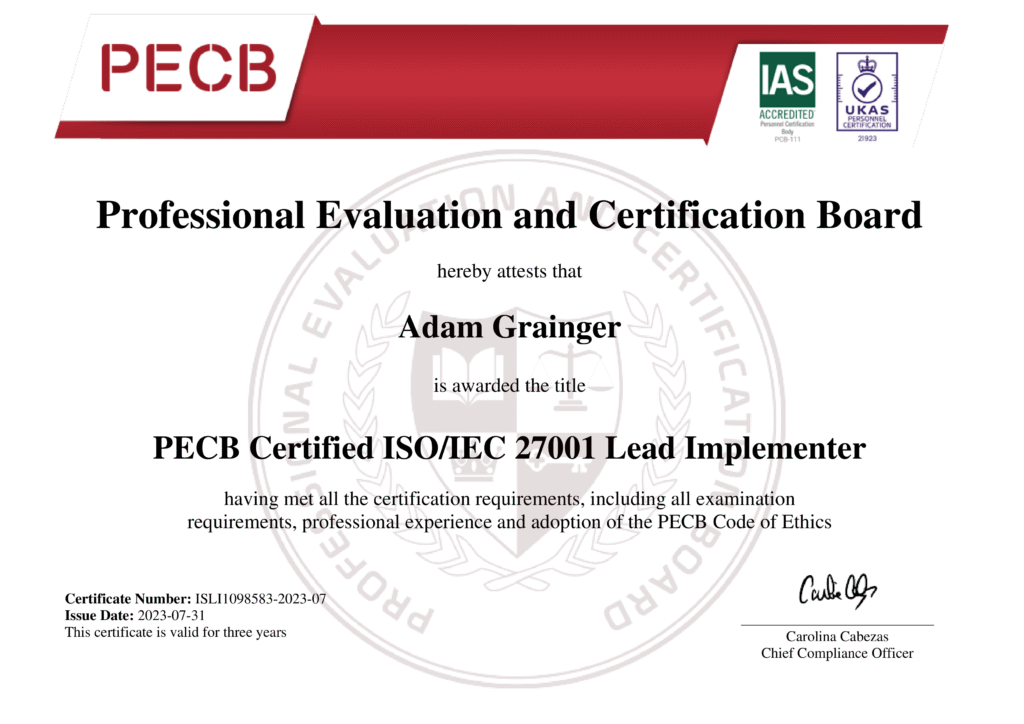

Let me be the first to point out how unfashionable it was to recertify last week as a lead implementer for the international information security standard (ISO/IEC 27001) in the middle of an AI boom.

So, why is this important to us at Accomplish?

Here are the top three reasons we stay focused on both agentic AI and information security. The third one is the most important.

1. There is no trade-off between agentic AI and information security – they must go hand in hand

First, there is no trade-off – quite the opposite.

In recent months, Accomplish has introduced its first batch of orchestration agents (systems that can take actions autonomously toward goals) into production, and they work. According to multiple sources, that places us in the minority.

“Slow is smooth and smooth is fast.” Guided by this approach, our agentic transformation began by building a new agent business case process, a high-level risk identifier, an agent build and test procedure, and a modular Agentic AI Risk Control Framework. Without these, I’m sure we would have lost time and money to avoidable errors, delayed efficiency savings, and audit stress.

For our CX benchmarking clients, we have developed Fetch – a CX Data Collection Agent that enables the secure calculation of CX metrics from existing systems, eliminating the need for manual uploads, while maintaining data segregation and audit trails.

- Fetch saves asset managers days of work every quarter.

- It strengthens their data foundations.

- It lets them discover where their CX helps or hurts their bottom line.

And it applies our data security protocols, including encrypted transfer, anonymised processing, and strict access controls, exposing no data at any stage.
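To make the idea of anonymised processing a little more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not a description of how Fetch works: the record fields (`client_id`, `score`), the function names, and the use of a keyed HMAC-SHA256 token are all hypothetical. Strictly speaking, this technique is pseudonymisation rather than full anonymisation, because whoever holds the key can recompute a token from a known identifier.

```python
import hashlib
import hmac
from statistics import mean


def pseudonymise(client_id: str, key: bytes) -> str:
    """Replace an identifier with a keyed, non-reversible token."""
    return hmac.new(key, client_id.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_identifiers(records: list[dict], key: bytes) -> list[dict]:
    """Return copies of the records with the raw identifier removed."""
    return [
        {"client": pseudonymise(r["client_id"], key), "score": r["score"]}
        for r in records
    ]


def average_score(records: list[dict]) -> float:
    """Compute a metric from records that no longer carry raw identifiers."""
    return mean(r["score"] for r in records)


if __name__ == "__main__":
    key = b"demo-key-not-for-production"  # hypothetical; a real key belongs in a secrets store
    raw = [
        {"client_id": "A", "score": 7},
        {"client_id": "A", "score": 9},
        {"client_id": "B", "score": 5},
    ]
    cleaned = strip_identifiers(raw, key)
    print(average_score(cleaned))
```

The point of the sketch is the ordering: identifiers are replaced before any metric is calculated, so the calculation step never sees them.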

So, to me, continued certification demonstrates that, alongside these innovations, we have also maintained our focus on our top priority, which is the integrity, confidentiality, and availability of our clients’ and our own information.

2. Deploying agentic AI prematurely and causing an incident will damage trust and credibility, so strengthen your ISO 27001 information security simultaneously

Second, as you automate decisions and workflows with agentic AI, you will create new security risks. Bad actors can trick agents by injecting malicious prompts, inducing policy drift, or ‘poisoning’ an agent’s memory. APIs may be insecure, and poorly designed agent credentials can create access control issues that risk data loss and collateral damage.

To be sure, this isn’t theory: red teams at the UK’s AI Security Institute were recently able to induce most of the leading-edge agents they tested to act in ways that contravened policies (see AISI’s Security Challenges in AI Agent Deployment, July 2025). If they can subvert agents, others can too, so as we adopt this exciting new technology, we must also strengthen our defences.

Because of this, developing new protections against these emerging risks needs to be central to your operational rollout of agentic AI. Specifically, you will need to manage risks end-to-end, from design and training to testing and ongoing monitoring.

In the middle of the AI boom, I can see how calling for holistic agentic AI risk management will prompt yawns around the world. But deploying agentic AI prematurely and causing an incident could severely damage trust and credibility.

That’s why, at Accomplish, we have integrated AI governance into our existing control environment. All agents are subject to our internal governance process, which includes risk reviews, change control, and periodic performance audits. Our framework also incorporates trust-building risk controls, such as verifiable memory logs, boundaries on delegated actions, and automated audit checkpoints.
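For readers who want to picture two of these controls, a verifiable memory log and a boundary on delegated actions, here is a minimal Python sketch. It is an illustration only and not Accomplish’s implementation: the names `MemoryLog`, `run_action`, and `ALLOWED_ACTIONS`, the example action names, and the choice of a SHA-256 hash chain are all assumptions made for the example.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, payload: dict) -> str:
    """Hash the payload together with the previous entry's hash."""
    body = json.dumps(payload, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


class MemoryLog:
    """Append-only log in which each entry commits to the one before it."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, payload: dict) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        self.entries.append(
            {"payload": payload, "prev": prev_hash, "hash": _digest(prev_hash, payload)}
        )

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev_hash = GENESIS
        for entry in self.entries:
            expected = _digest(prev_hash, entry["payload"])
            if entry["prev"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True


# Hypothetical boundary: the only actions this agent may take on its own.
ALLOWED_ACTIONS = {"read_metrics", "write_report"}


def run_action(log: MemoryLog, action: str) -> bool:
    """Log every request, and permit it only if it is inside the boundary."""
    allowed = action in ALLOWED_ACTIONS
    log.append({"action": action, "allowed": allowed})
    return allowed


if __name__ == "__main__":
    log = MemoryLog()
    print(run_action(log, "read_metrics"))
    print(run_action(log, "delete_records"))
    print(log.verify())
```

Because each entry’s hash depends on the entry before it, an auditor can rerun `verify()` at a checkpoint and detect whether anything in the agent’s record has been altered after the fact. A production system would also need to protect the log itself, for example by storing the latest hash somewhere the agent cannot write to.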

3. Security is a trust signal. AI without it may become a risk signal.

Third and most important, ours is a long-term bet on the value of protecting our own information assets and those our clients entrust to us.

Ransomware, data theft, and business interruption are already known, measurable, regulated, heavily litigated, and monetarily material threats. What will happen when a bad actor spoils the party by taking charge of your cool new swarm of agents?

So, while clients, partners, and insurers may be impressed by AI experiments, they will walk away if you fail a security audit. Note: Accomplish has a 100% success rate for our clients’ security audits.

Next step: leverage our Agentic AI Risk Control Framework

To conclude, as we look forward to our next information security external audit (which will be our first to include agentic AI), here are three cheers for the painstaking, unfashionable, and never-ending tasks of threat detection, patching cycles, and identity management.

Footnote: We have published this article not because we believe we have solved agentic risk management, but because we believe everyone still has so much to learn about agentic AI and information security. So, while our Agentic AI Risk Control Framework aligns with others like the NIST AI RMF and the EU AI Act, almost every day brings a new development in agentic AI, which we run through the framework to make sure our defences remain fit for purpose.

We’ve published this article, therefore, to connect with others who are also addressing these challenges. Specifically, we invite clients, partners, and peers working with agentic AI to review and contribute to our public consultation on the Framework, which we will initiate in September.

Lastly, if you’re also considering participating in the CX Benchmark, we’d be happy to discuss how our information security approach ensures your data stays protected throughout.

Published: 8 August 2025 by Adam Grainger, Managing Director, Accomplish.

Frequently asked questions

1. What is agentic AI, and why is it a security risk?

Agentic AI refers to systems that can take actions toward goals without constant human oversight. These systems create new security risks such as memory poisoning, prompt injection, and uncontrolled decision loops.

2. How does Accomplish secure data in the CX Benchmark?

We apply ISO/IEC 27001-standard controls, including encrypted data transfer, anonymised processing, full audit trails, and strict access permissions. We expose no data at any stage.

3. What is the Agentic AI Risk Control Framework?

The Agentic AI Risk Control Framework is a structured, ISO-aligned framework developed by Accomplish to identify, mitigate, and monitor risks introduced by autonomous agents, especially in regulated environments like financial services.